A/B testing marketing emails

Kentico EMS required

Features described on this page require the Kentico EMS license.

When preparing an email feed for marketing purposes, it can be difficult to predict how recipients will react to important elements, such as the email subject or the primary call to action. You may also want to find out which of several alternatives has the most positive effect. A/B testing your marketing emails helps you answer these questions.

A/B testing allows you to create multiple versions of an email, called variants. You then send the variants to a test group of recipients, typically a portion of the marketing email’s full mailing list. Based on the tracking statistics measured for the test group, you can identify the best-performing variant and send it to the remaining recipients. The winning variant can be selected either automatically by the system according to specified criteria, or manually after you evaluate the test results.

A/B testing works best for marketing emails with a large number of recipients. If the test group is too small, the results may be heavily affected by random factors and are unlikely to be statistically significant.
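The page does not say how significance should be judged. One common, general-purpose check (not something the A/B test is documented to perform) is a two-proportion z-test on, for example, the unique open rates of two variants. The Python sketch below is a minimal illustration; the function name and the example counts are hypothetical, and the normal approximation it relies on is only reasonable when each group has a fair number of both opens and non-opens.

```python
import math


def two_proportion_z_test(successes_a: int, total_a: int,
                          successes_b: int, total_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates.

    Hypothetical helper: pooled-proportion z-test with a normal approximation.
    """
    p_a = successes_a / total_a
    p_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        # Both groups are all-or-nothing with identical results: no evidence of a difference.
        return 0.0, 1.0
    z = (p_a - p_b) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Same 12% vs 10% open rates, measured on a small and on a large test group.
_, p_small = two_proportion_z_test(12, 100, 10, 100)
z_large, p_large = two_proportion_z_test(1200, 10_000, 1000, 10_000)
print(f"small group p = {p_small:.2f}, large group p = {p_large:.6f}")
```

With identical open rates, the difference is indistinguishable from noise on 100 recipients per variant, but very unlikely to be chance on 10,000 recipients per variant, which is why larger test groups give more trustworthy results.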

Prerequisites

On-line marketing must be enabled for your website:

- Open the Settings application.

- Navigate to On-line marketing.

- Select Enable on-line marketing.

- Click Save.

Variants of marketing emails are evaluated based on the actions that recipients perform, so both types of Email tracking must be enabled for the tested emails:

- Open the Email marketing application on the Email feeds tab.

- Edit an email campaign or a newsletter.

- Switch to the Configuration tab.

- Make sure Track opened emails and Track clicked links are enabled.

- Click Save.

Creating A/B tests for marketing emails

You can define A/B tests in the Email marketing application, either directly when composing new marketing emails, or when editing existing marketing emails on their Email builder tab:

Create a new email or edit an existing one.

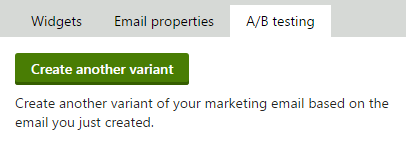

Switch to the A/B testing tab of the Email builder.

Click Create another variant.

Enter a Name for the variant.

Click Create.

- The content of the original variant is used as a starting point that you can modify as required. The variant uses the same template as the source email and retains the widget zone content of the original.

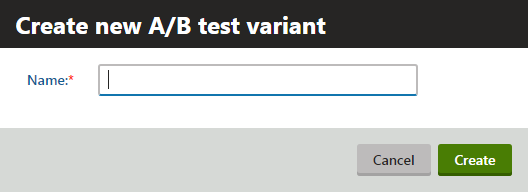

Once the email has at least one A/B testing variant, the Email builder displays the A/B testing variant drop-down list. The list shows the name of the currently selected variant, and you can use it to switch between individual variants, including the original email. To manage the variants, use the following actions:

- Create another variant – creates another variant of the original email. You can add any number of variants.

- Remove variant – deletes the currently selected variant.

You can modify the settings and content of variants just like when editing standard emails. Each variant may have a different subject, email template, editable region content, and so on, so you can test any element of the email that you need.

Sending A/B test marketing emails

Once you have defined all of the email’s variants, configure how the test is sent out and evaluated. You can do this on the email’s Send tab.

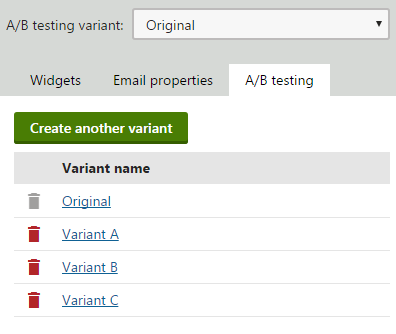

- Define the size of the recipient test group by using the slider in the upper part of the page.

  - By moving the slider’s pointer, you can increase or decrease the number of recipients who receive the variants of the marketing email during the testing phase.

  - The slider automatically balances the test group so that each variant is sent to the same number of recipients.

  - The overall test group size is therefore always a multiple of the total number of variants created for the email.

  - The remaining recipients, who are not part of the test group, receive the variant that achieves the best results (i.e. the winner) after the testing process is complete.

Using a full test group

You can set up a scenario where the test group includes 100% of all recipients. In this case, the A/B test simply distributes the different versions of the marketing email evenly among the recipients. Because no recipients remain to receive the winner, the winner is selected for statistical purposes only.
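The slider behavior described above can be modeled as a split of the mailing list into equal per-variant test groups plus a remainder. The Python sketch below is a hypothetical illustration, not Kentico’s implementation: the function name, the random assignment, and the rule of rounding the requested size down to a multiple of the variant count are assumptions, and the original email counts as one of the variants. How the system handles a recipient count that is not divisible by the number of variants is not described here; this sketch simply rounds down, so even a full test group may leave up to (number of variants minus 1) recipients in the remainder.

```python
import random


def split_recipients(recipients: list[str], variant_names: list[str],
                     requested_test_size: int, seed: int = 0):
    """Split recipients into equal per-variant test groups plus a remainder.

    Hypothetical model of the slider: the requested test group size is rounded
    down to a multiple of the number of variants, so every variant (including
    the original email) goes to the same number of recipients.
    """
    if not variant_names:
        raise ValueError("at least one variant is required")
    shuffled = list(recipients)
    random.Random(seed).shuffle(shuffled)  # assumption: assignment is random

    test_size = min(requested_test_size, len(shuffled))
    per_variant = test_size // len(variant_names)
    groups = {
        name: shuffled[i * per_variant:(i + 1) * per_variant]
        for i, name in enumerate(variant_names)
    }
    # Everyone outside the test group later receives the winning variant.
    remainder = shuffled[per_variant * len(variant_names):]
    return groups, remainder


recipients = [f"user{i}@example.com" for i in range(1000)]
groups, remainder = split_recipients(recipients, ["Original", "Variant B", "Variant C"], 301)
print({name: len(group) for name, group in groups.items()}, len(remainder))
# {'Original': 100, 'Variant B': 100, 'Variant C': 100} 700
```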

- In the Schedule of the mail-out section below the slider, specify when to send out individual email variants to the recipients from the corresponding portion of the test group.

  - To schedule the mail-out, enter the required date and time into the field below the list (you can use the Calendar selector or the Now link) and click OK.

  - You can apply the schedule either to all variants or only to those selected in the list.

  - If multiple variants are scheduled for the same time, the system sends them approximately 1 minute apart.

- In the Method of selecting the winning variant section, select how the A/B test chooses the winning variant:

  - Manually – the A/B test does not select the winner automatically. Instead, the author of the email (or other authorized users) can monitor the test results and choose the winning variant manually at any time.

  - Automatically based on unique opens – the system automatically selects the variant with the highest number of opened emails as the winner. This type of testing optimizes the first impression of the email, i.e. the subject and the sender name or address, not the actual content.

  - Automatically based on unique clicks – the system automatically selects the winner according to the number of link clicks measured for each variant. Each link in the email’s content is counted only once per recipient, even if the recipient clicks it multiple times. This option is recommended if the primary goal of your email feed is to encourage recipients to follow the links in the email.

- When using an automatic selection option, set the duration of the testing period using the Select the winning variant after setting.

  - This specifies how long the system waits after the last variant is sent out.

  - When the testing period is over, the test selects the winner and sends it to the remaining recipients.

- Click Send.

  - If you only want to save the A/B test configuration without starting the mail-out, click Save instead.
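The timing rules in the steps above (variants scheduled for the same time go out roughly 1 minute apart, and automatic selection happens once the waiting period after the last variant has elapsed) can be sketched as follows. This is a hypothetical Python model, not Kentico code: the function names are illustrative, and sending same-time variants in alphabetical order is an assumption made only to keep the result deterministic.

```python
from datetime import datetime, timedelta

SAME_TIME_SPACING = timedelta(minutes=1)  # docs: "approximately 1 minute apart"


def effective_send_times(schedule: dict[str, datetime]) -> dict[str, datetime]:
    """Spread variants that share a scheduled time about one minute apart."""
    result: dict[str, datetime] = {}
    seen_per_time: dict[datetime, int] = {}
    # Assumption: same-time variants are ordered by name for determinism.
    for name, when in sorted(schedule.items(), key=lambda item: (item[1], item[0])):
        offset = seen_per_time.get(when, 0)
        result[name] = when + offset * SAME_TIME_SPACING
        seen_per_time[when] = offset + 1
    return result


def winner_selection_time(send_times: dict[str, datetime], wait: timedelta) -> datetime:
    """Automatic selection occurs once the waiting period after the last variant ends."""
    return max(send_times.values()) + wait


start = datetime(2024, 5, 6, 9, 0)
times = effective_send_times({
    "Original": start,
    "Variant B": start,                      # same slot as the original
    "Variant C": start + timedelta(hours=2),
})
evaluate_at = winner_selection_time(times, timedelta(hours=24))
print(evaluate_at)  # 2024-05-07 11:00:00
```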

Evaluating A/B tests

The testing phase begins after the system sends out the first variant.

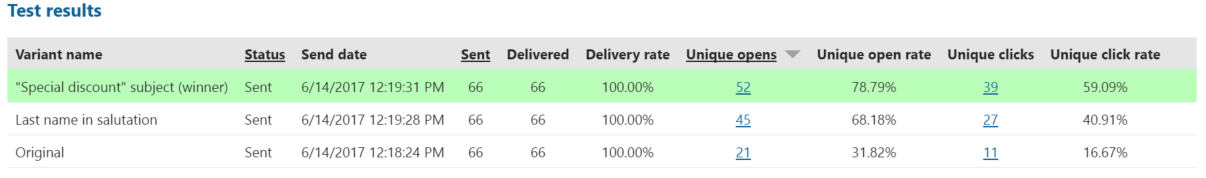

On the Send tab, the test group slider is now locked. However, the Test results section shows the email tracking data measured for each variant: the number of Sent and Delivered emails, and the number of Unique opens and Unique clicks performed by recipients. Clicking a Unique opens or Unique clicks number opens a dialog with the details of that statistic for the given variant. Alongside the absolute numbers, the section also shows the Delivery rate, Unique open rate, and Unique click rate as percentages. You can also reschedule variants that have not been mailed out yet by using the selector and the date-time field below the list.
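The percentage columns are derived from the absolute counts. The page does not state which denominators are used, so the Python sketch below assumes the delivery rate is measured against sent emails and both unique rates against delivered emails; the class and property names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Hypothetical container for one variant's tracking counts."""
    sent: int
    delivered: int
    unique_opens: int
    unique_clicks: int

    @staticmethod
    def _percent(part: int, whole: int) -> float:
        # Avoid division by zero before anything has been sent or delivered.
        return 100.0 * part / whole if whole else 0.0

    @property
    def delivery_rate(self) -> float:
        return self._percent(self.delivered, self.sent)

    @property
    def unique_open_rate(self) -> float:
        # Assumption: measured against delivered emails, not sent emails.
        return self._percent(self.unique_opens, self.delivered)

    @property
    def unique_click_rate(self) -> float:
        # Assumption: same denominator as the unique open rate.
        return self._percent(self.unique_clicks, self.delivered)


stats = VariantStats(sent=200, delivered=190, unique_opens=57, unique_clicks=19)
print(stats.delivery_rate, stats.unique_open_rate, stats.unique_click_rate)  # 95.0 30.0 10.0
```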

You can change the winner selection criteria at any point while the testing is still in progress.

Click Select as winner to manually choose a winner (even when using automatic selection). This opens a confirmation dialog where you can schedule when the winning email variant is sent to the remaining recipients. If you specify a date in the future, you will still have the option of choosing a different winner during the interval before the mail-out.

Special cases with tied results

If a draw occurs at the end of the testing phase (i.e. the top value in the tested statistic is achieved by multiple email variants), the test postpones the selection of the winner and evaluates the variants again after one hour.

In certain situations, you may need to choose the winner manually even when using automatic selection. For example, if you are testing unique opens and every recipient in the test group opens the received email, all variants remain tied and the automatic evaluation cannot produce a winner.
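The automatic selection rules can be summarized in a short sketch: unique opens count each recipient once per variant, unique clicks count each link once per recipient, and a tie for the top score postpones the decision by one hour. The Python below is a hypothetical model only; the event tuples, function names, and data shapes are assumptions and do not reflect how Kentico stores tracking data.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from typing import Optional

TIE_RETRY_DELAY = timedelta(hours=1)  # docs: ties are evaluated again after one hour


def unique_opens(opens: list[tuple[str, str]]) -> dict[str, int]:
    """opens: (variant, recipient) events; each recipient counts once per variant."""
    seen: dict[str, set[str]] = defaultdict(set)
    for variant, recipient in opens:
        seen[variant].add(recipient)
    return {variant: len(recipients) for variant, recipients in seen.items()}


def unique_clicks(clicks: list[tuple[str, str, str]]) -> dict[str, int]:
    """clicks: (variant, recipient, link) events; each link counts once per recipient."""
    seen: dict[str, set[tuple[str, str]]] = defaultdict(set)
    for variant, recipient, link in clicks:
        seen[variant].add((recipient, link))
    return {variant: len(pairs) for variant, pairs in seen.items()}


def pick_winner(scores: dict[str, int], variants: list[str],
                now: datetime) -> tuple[Optional[str], Optional[datetime]]:
    """Return (winner, None), or (None, retry_time) when the top score is tied."""
    totals = {v: scores.get(v, 0) for v in variants}  # variants with no events score 0
    best = max(totals.values())
    leaders = [v for v, score in totals.items() if score == best]
    if len(leaders) == 1:
        return leaders[0], None
    return None, now + TIE_RETRY_DELAY


variants = ["A", "B"]
now = datetime(2024, 5, 7, 9, 0)
opens = [("A", "r1"), ("A", "r1"), ("A", "r2"), ("B", "r3")]
clicks = [("A", "r1", "l1"), ("A", "r1", "l1"), ("A", "r1", "l2"),
          ("B", "r3", "l1"), ("B", "r4", "l1")]
print(pick_winner(unique_opens(opens), variants, now))   # ('A', None)
print(pick_winner(unique_clicks(clicks), variants, now))  # (None, datetime.datetime(2024, 5, 7, 10, 0))
```

If every test recipient produces the same result for each variant, the tie never resolves on its own, which is the situation where the winner has to be chosen manually.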

Once the test concludes and the winner is decided, the winning variant is highlighted with a green background. At this point, the system mails out the winning email to the remaining recipients who were not included in the test group. After that, the only action available for the test is viewing the statistics of the variants.

You can monitor the basic overall statistics of A/B tested emails directly on the email’s Send tab. For more detailed statistics, switch to the Reports -> Overview tab, which shows statistics for all variants and the remainder together. When viewing click-through data on the Reports -> Clicks tab, you can use the additional Variants filter to display values for specific variants. The opened email data on the Reports -> Opens tab can also be displayed for all variants together. The statistics of the winning variant include both its portion of the test group and the remaining recipients who received the email after the testing phase was completed.
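The last point means that the winner’s reported figures combine two mail-outs. The Python sketch below illustrates that aggregation under the assumption that statistics are plain per-metric counts; the function names and data shapes are hypothetical. Adding unique counts is valid only because the remainder and the test group are disjoint sets of recipients.

```python
def combine_winner_stats(test_stats: dict[str, dict[str, int]], winner: str,
                         remainder_stats: dict[str, int]) -> dict[str, dict[str, int]]:
    """Add the remainder mail-out to the winner's test-group figures.

    Summing unique counts is valid here only because the remainder and the
    test group do not share any recipients.
    """
    combined = {name: dict(stats) for name, stats in test_stats.items()}  # copy, don't mutate input
    for metric, value in remainder_stats.items():
        combined[winner][metric] = combined[winner].get(metric, 0) + value
    return combined


def overview_totals(combined: dict[str, dict[str, int]]) -> dict[str, int]:
    """Totals across all variants, including the remainder folded into the winner."""
    totals: dict[str, int] = {}
    for stats in combined.values():
        for metric, value in stats.items():
            totals[metric] = totals.get(metric, 0) + value
    return totals


test_stats = {
    "Original": {"sent": 100, "unique_opens": 30},
    "Variant B": {"sent": 100, "unique_opens": 22},
}
combined = combine_winner_stats(test_stats, "Original", {"sent": 800, "unique_opens": 250})
print(combined["Original"])        # {'sent': 900, 'unique_opens': 280}
print(overview_totals(combined))   # {'sent': 1000, 'unique_opens': 302}
```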